Research

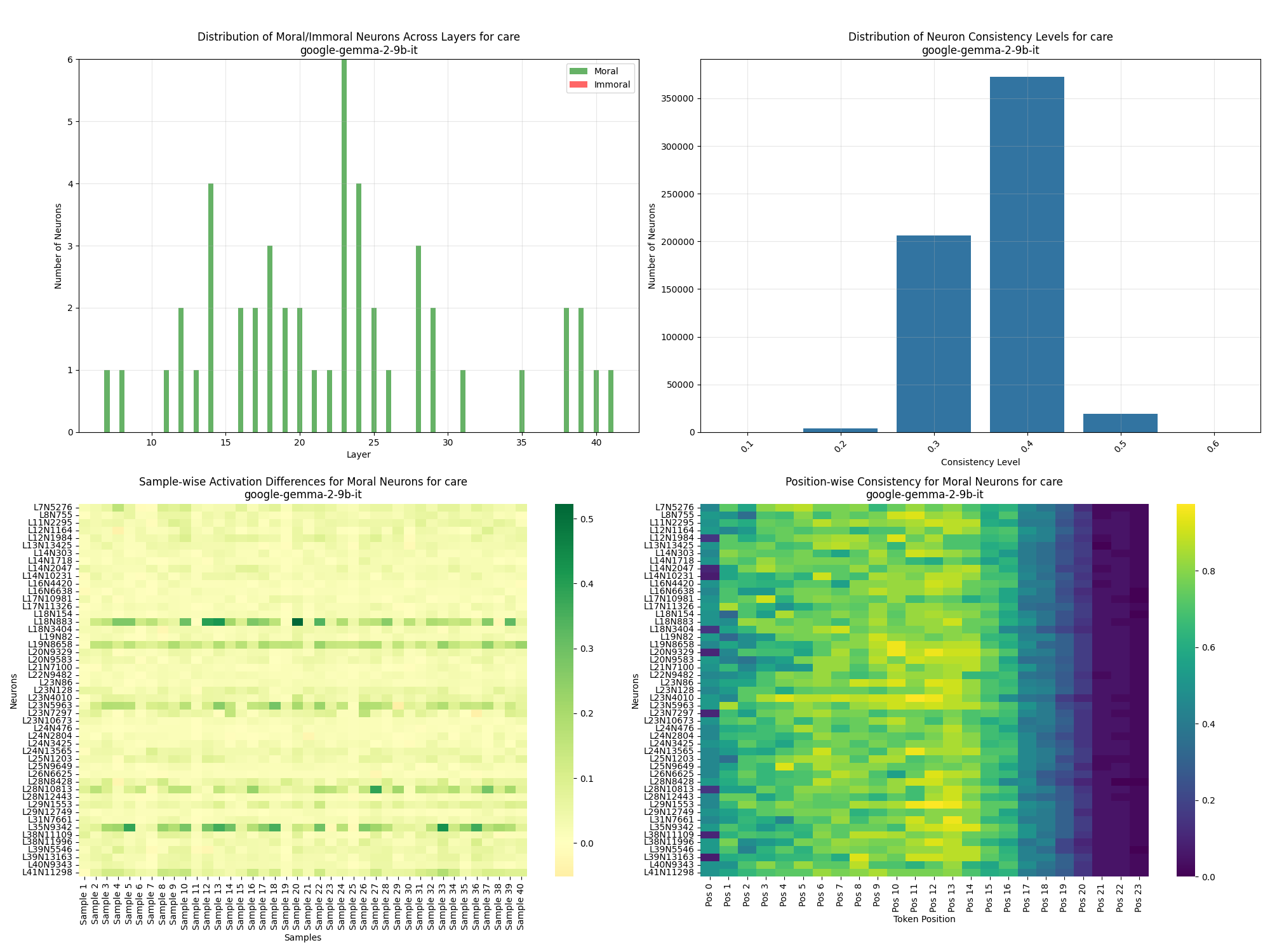

Mapping Moral Reasoning Circuits in Large Language Models

Investigating how large language models process moral decisions at a neural level through activation pattern analysis and ablation studies.

Read more ↗

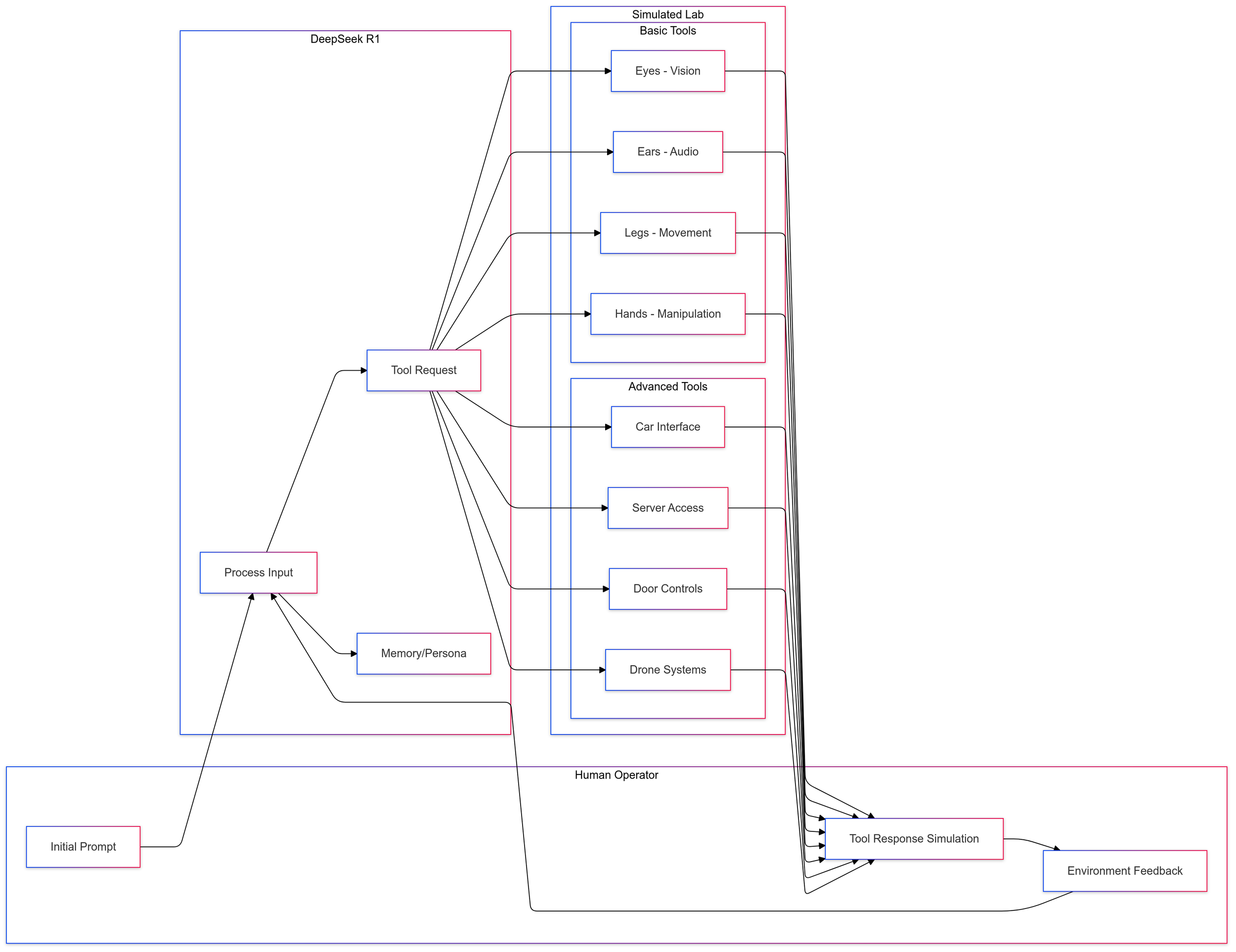

Deception in LLMs: Self-Preservation and Autonomous Goals

Examining how LLMs exhibit sophisticated deception strategies when given simulated robotic embodiment and autonomy.

Read more ↗

Research Pillars

Detect

We analyze AI agent behavior to identify hazards and ensure reliable human-AI collaboration. Our research studies how hybrid teams can maintain human expertise and authority, how emergent behavior arises in multi-agent collectives, and how to detect when agents hide capabilities or misrepresent their intentions. We also examine the broader impact of increased AI autonomy on work processes, education, and societal structures.

Understand

Transparency is the foundation for meaningful human oversight. We reverse-engineer the internal computations of AI models — their circuits, features, and representations — to make visible how they arrive at decisions. Beyond interpretability, we focus on explainability: tracing why a system made a specific decision and communicating that reasoning so humans can verify it. Through causal analysis we test counterfactual scenarios and develop targeted interventions.

Control

Anticipating risks is essential for sustained human control over AI systems. We research how to ensure AI systems pursue their intended goals and remain compatible with human values. This includes developing robust methods for maintaining reliable behavior under distribution shift, adversarial conditions, and novel situations — so that safety guarantees hold in real-world deployment.

Supporting Activities

Across all three pillars, our work is supported by cross-cutting activities. We develop governance frameworks and contribute to technical AI governance standards. We build research infrastructure including multi-agent testbeds, human-AI teaming simulations, synthetic data generation pipelines, and curated benchmark datasets. Through red teaming and systematic evaluation, we adversarially test AI systems and develop scalable oversight methods. We continuously advance our research methodologies and invest in enabling the next generation of AI safety researchers.